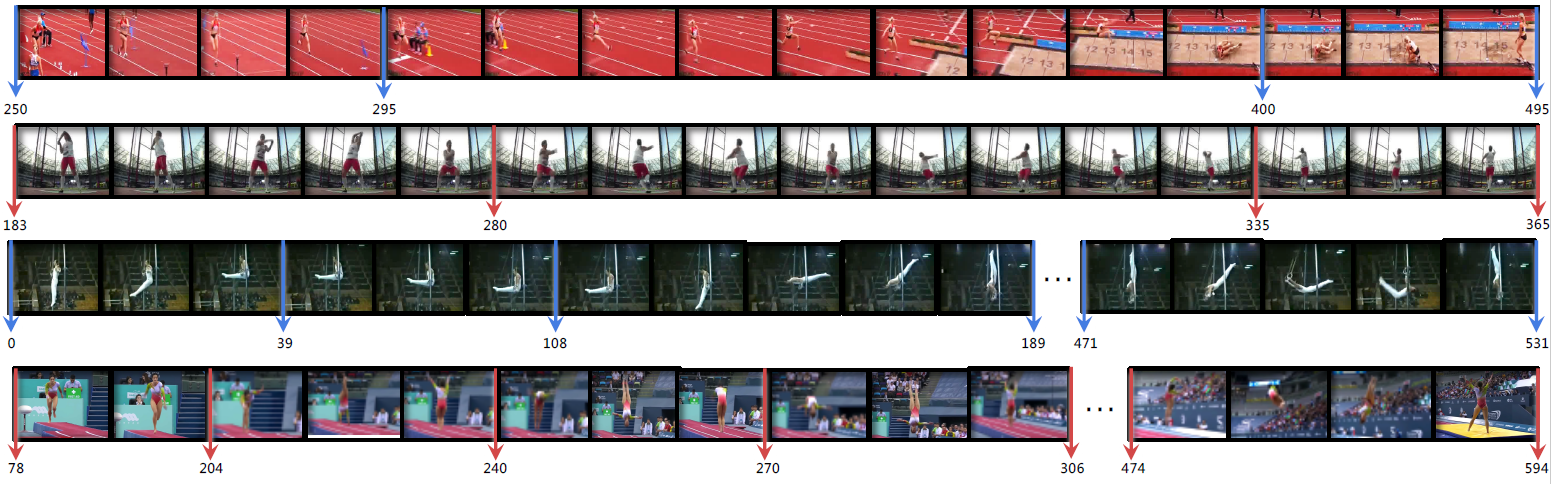

How to read the temporal annotation files (JSON)?

Below, we show an example entry from the above JSON annotation file:

{

"yMK2zxDDs2A": { # youtube_id of this video

"s00004_0_100_7_931": { # action_id: this action is in shot4 ranging from 0.1s to 7.931s

"action": 11, # action-level label

"substages": [ # sub-action boundaries

0,

79,

195

],

"total_frames": 195, # total frame of thie action instance

"shot_timestamps": [ # absolute temporal location of shot4 (s00004) within this video

43.36,

53.48

],

"subset": "train" # train or validation

}, .....